Anthos has been making waves in the IT industry since Google launched the service in April 2019.

At the launch, held at the Google Cloud Next conference in 2019, Google CEO Sundar Pichai said the idea behind Anthos was to allow developers to “write once and run anywhere”.

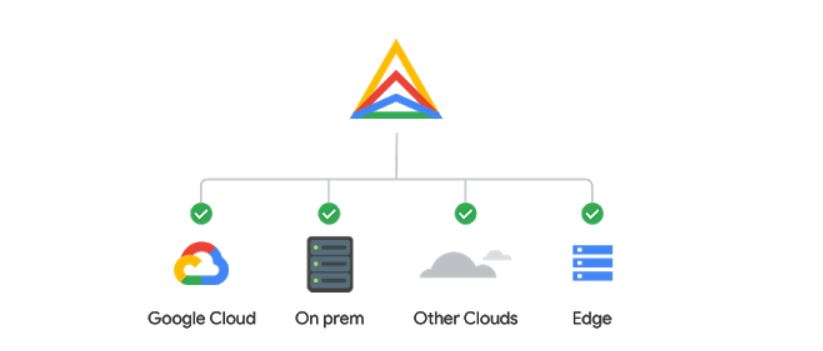

Anthos promised to simplify the development, deployment, and operation of containerised applications across multiple different cloud providers by “bridging” incompatible cloud architectures.

When announced, this technology created a whirlwind of excitement for cloud fanatics such as myself. Google Cloud promised its customers a way to run Kubernetes workloads across environments such as Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP) and on-premises (VMware). Anthos support for AWS and Microsoft Azure was released in April 2020 and December 2021 respectively.

You may be asking, “how about bare metal machines?” Of course, there are scenarios where physical bare metal servers are used, e.g. Dell rack-mount servers. Google was considerate enough to cover this use case with an alternative flavour of Anthos: Anthos on Bare Metal.

Google has been the front-runner in developing Kubernetes-related technologies. This comes as no surprise, as Kubernetes was originally developed by Google engineers, who were early contributors to Linux container technology.

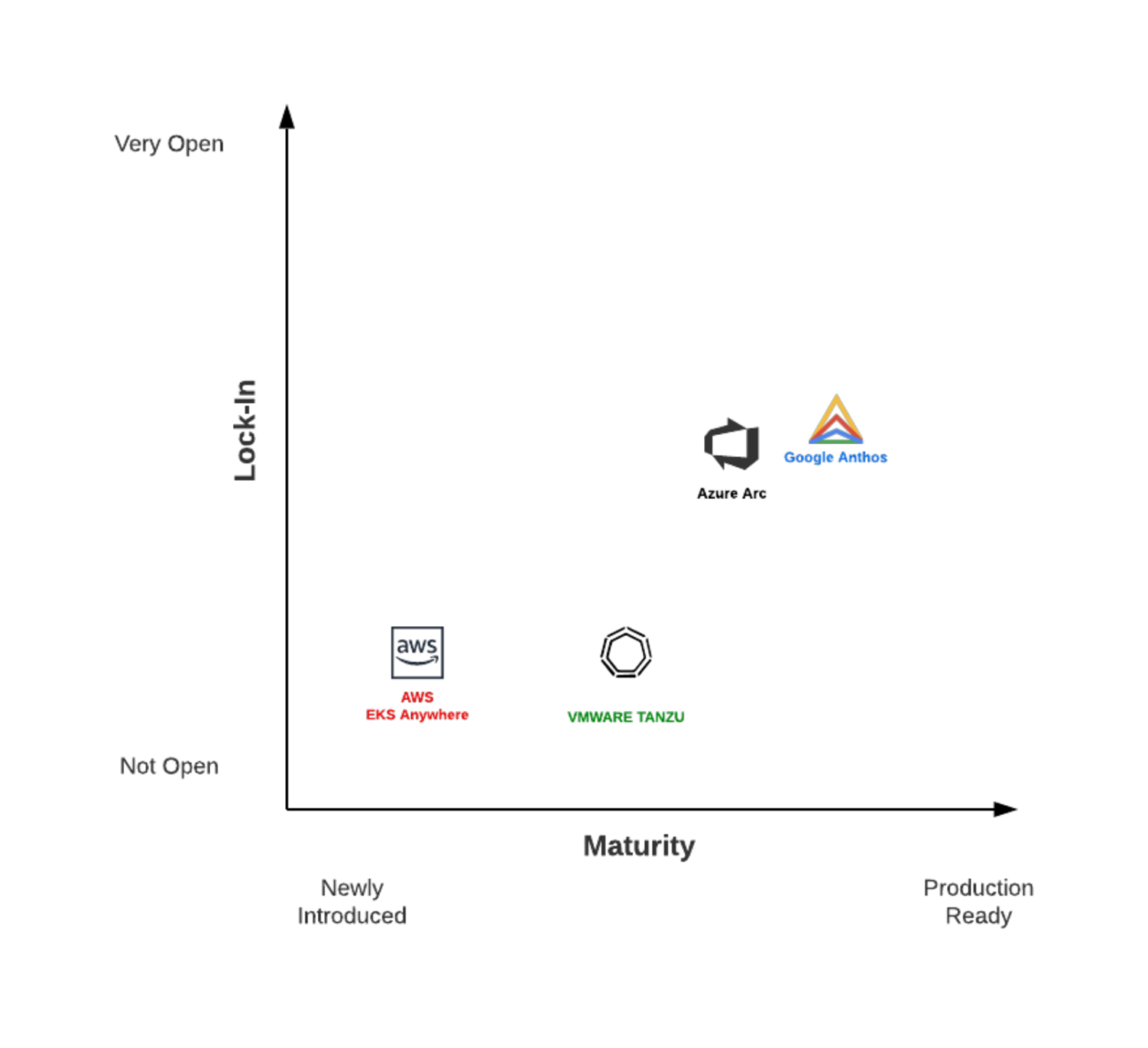

While Google may be the godfather of Kubernetes, the release of Anthos prompted other cloud providers such as AWS and Microsoft Azure to follow suit, although their offerings still lack maturity in comparison to Anthos at the time of writing.

The chart below shows a high-level overview of some of the cloud providers that offer services similar to Anthos, e.g. EKS Anywhere. As you can see, Google is the pace-setter: Anthos is currently at a far more mature, production-ready stage.

Diving into Anthos

Business Benefits & Cost Savings

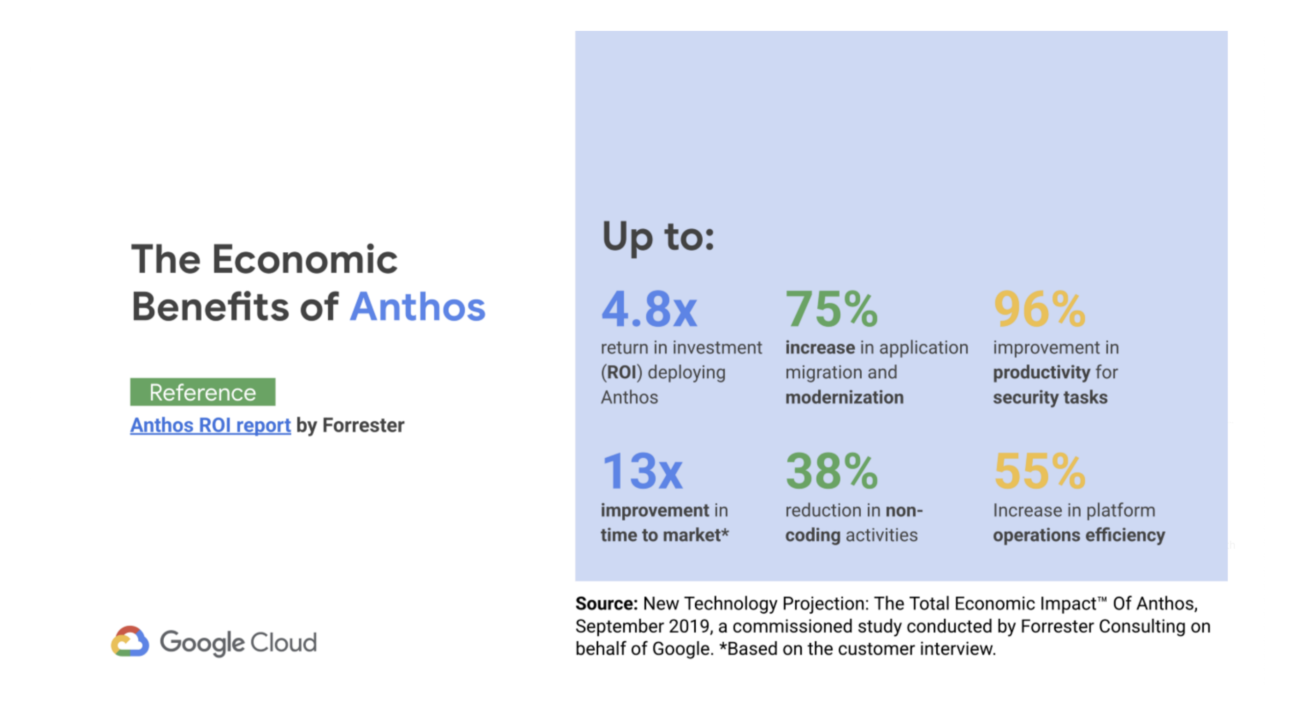

In November 2019, Forrester conducted an analysis, on behalf of Google, of the projected business benefits and cost savings enabled by Anthos.

The diagram below, taken from Forrester’s consultation report, shows a significant improvement when adopting Anthos: a 38% reduction in non-coding activities, a 13-fold increase in pushing products and services to production, and a 55% increase in platform efficiencies.

Pricing

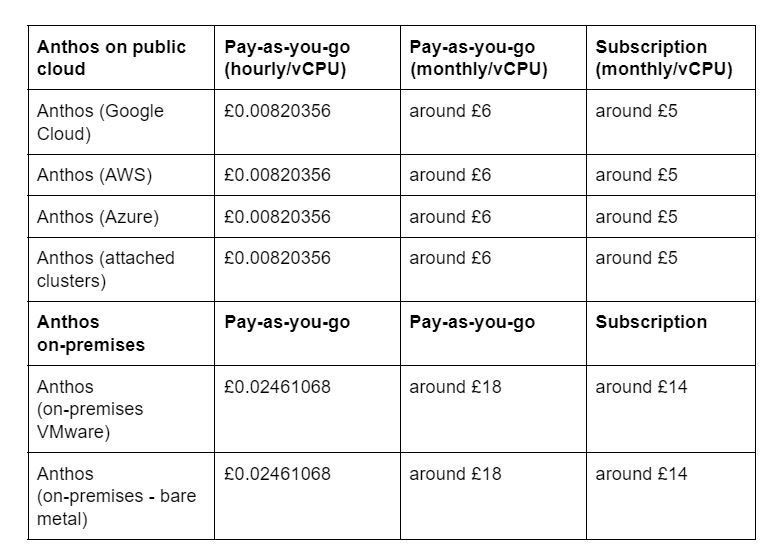

So far, you’ve seen a brief review of the non-financial and cost-saving benefits of using Anthos from a business point of view, but what are the actual costs of using Anthos?

Anthos is charged on an hourly basis, and the calculation is based on the number of Anthos cluster vCPUs under management. A vCPU is considered to be ‘under management’ when it is seen by the Anthos control plane as schedulable compute capacity.

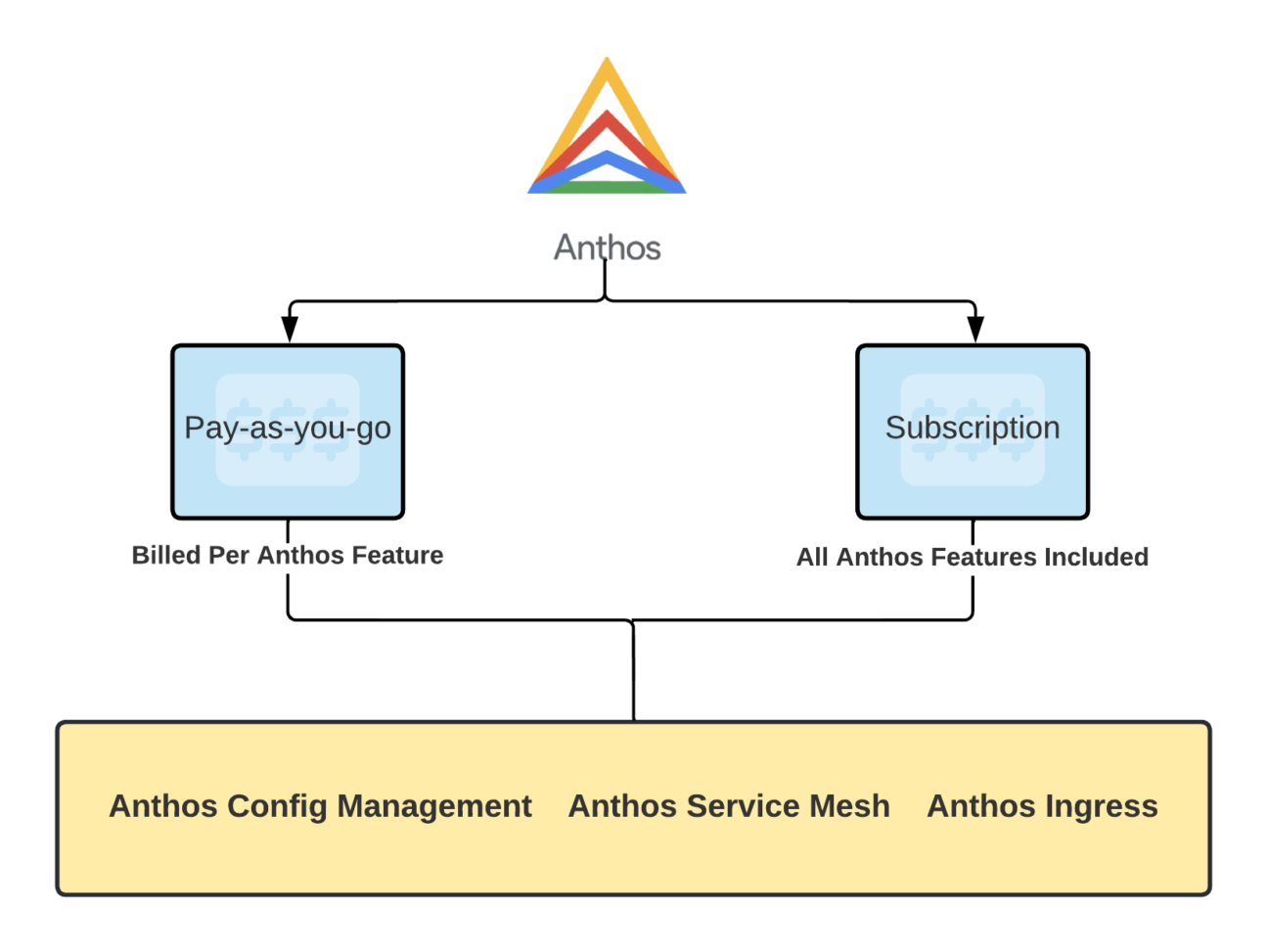

Anthos offers two pricing models:

1. Pay-as-you-go – Where you are billed for Anthos-managed clusters as you use them.

2. Subscription – Provides a discounted price for a committed term. Your monthly subscription covers all Anthos deployments, irrespective of environment, at their respective billing rates. Any usage over your monthly subscription fee will show up as overages in your monthly bill at the pay-as-you-go rates listed below (NOTE: Rates are estimates). The monthly subscription fee is not adjusted for under-usage. You can continually monitor your usage of Anthos and the cost benefits of your subscription, letting you adjust your subscription on the next renewal.

The difference between the two options is that pay-as-you-go is better suited to businesses or organisations wanting to use one or two Anthos-related features whilst running everything in GCP, whereas a subscription is better suited to organisations wanting to make use of all Anthos native features.

To see the vCPU count used for Anthos billing on each cluster, you can run the following command:
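To make the two models concrete, here is a minimal Python sketch of one way to read the billing rules above. The fee and rates are placeholders, not real Anthos prices, and the function names (`payg_bill`, `subscription_bill`) are hypothetical. It also assumes a single environment with a single rate and treats the subscription fee as pre-paying for a block of vCPU-hours, which is a simplification rather than Google’s actual billing calculation.

```python
def payg_bill(vcpu_hours: float, payg_rate: float) -> float:
    """Pay-as-you-go: every vCPU-hour under management is billed at the hourly rate."""
    return vcpu_hours * payg_rate


def subscription_bill(vcpu_hours: float, monthly_fee: float,
                      subscription_rate: float, payg_rate: float) -> float:
    """Subscription: the fee is always charged; usage beyond what it covers is overage."""
    # Assumption: the fee pre-pays this many vCPU-hours at the discounted rate.
    covered_hours = monthly_fee / subscription_rate
    # Usage above the covered amount is billed at the pay-as-you-go rate.
    overage_hours = max(0.0, vcpu_hours - covered_hours)
    return monthly_fee + overage_hours * payg_rate


# Placeholder numbers chosen for easy arithmetic, NOT real Anthos prices.
FEE, SUB_RATE, PAYG_RATE = 600.0, 0.5, 1.0

print(payg_bill(1500, PAYG_RATE))                         # 1500.0
print(subscription_bill(1500, FEE, SUB_RATE, PAYG_RATE))  # 900.0 (300 overage hours)
print(subscription_bill(1000, FEE, SUB_RATE, PAYG_RATE))  # 600.0 (fee not reduced for under-usage)
```

With these made-up numbers, the subscription only pays off once usage is high enough that the discounted rate outweighs the fixed fee, which is the trade-off to check before each renewal.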

    kubectl get nodes -o=jsonpath="{range .items[*]}{.metadata.name}{\"\t\"}{.status.capacity.cpu}{\"\n\"}{end}"
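The command prints one line per node, with the node name and its CPU capacity separated by a tab. As a rough sketch, the per-node values could be added up with a few lines of Python. The helper names and the sample node names below are hypothetical, and the parser only assumes that capacity is reported either as a whole number of cores or in millicores with an `m` suffix.

```python
def parse_cpu(quantity: str) -> float:
    """Convert a Kubernetes CPU quantity such as '4' or '3920m' to a number of vCPUs."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000  # millicores to cores
    return float(quantity)


def total_vcpus(kubectl_output: str) -> float:
    """Sum the CPU column of '<node name><TAB><cpu>' lines; other lines are ignored."""
    total = 0.0
    for line in kubectl_output.splitlines():
        parts = line.split()
        if len(parts) != 2:
            continue  # skip blank or unexpected lines
        total += parse_cpu(parts[1])
    return total


# Made-up output for a three-node cluster.
sample = "node-a\t4\nnode-b\t4\nnode-c\t8\n"
print(total_vcpus(sample))  # 16.0
```

The total is the number to multiply by the hourly rate when estimating a bill.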

A brief introduction to Anthos

Earlier in this blog post I discussed the history of Anthos, how it came about, its competitors and its business-related benefits, but you may still be asking: what exactly is Anthos?

In short, Anthos is a modern application management platform that provides a unified and consistent development and operations model for computing, networking and service management across different environments such as AWS, GCP, Microsoft Azure, on-prem VMware and physical bare metal servers.

In even shorter terms, Anthos is a platform based on the Kubernetes technology that Google developed and it can be hosted anywhere and everywhere.

A simple scenario is a client using both GCP and AWS as cloud providers. They could easily host workloads on a Google GKE cluster on AWS, using AWS EC2 compute instances as its control plane and worker nodes. In some cases, this can save the client money on hosting the cluster, as some AWS EC2 instances may well be cheaper than the comparable GCP Compute Engine instances.

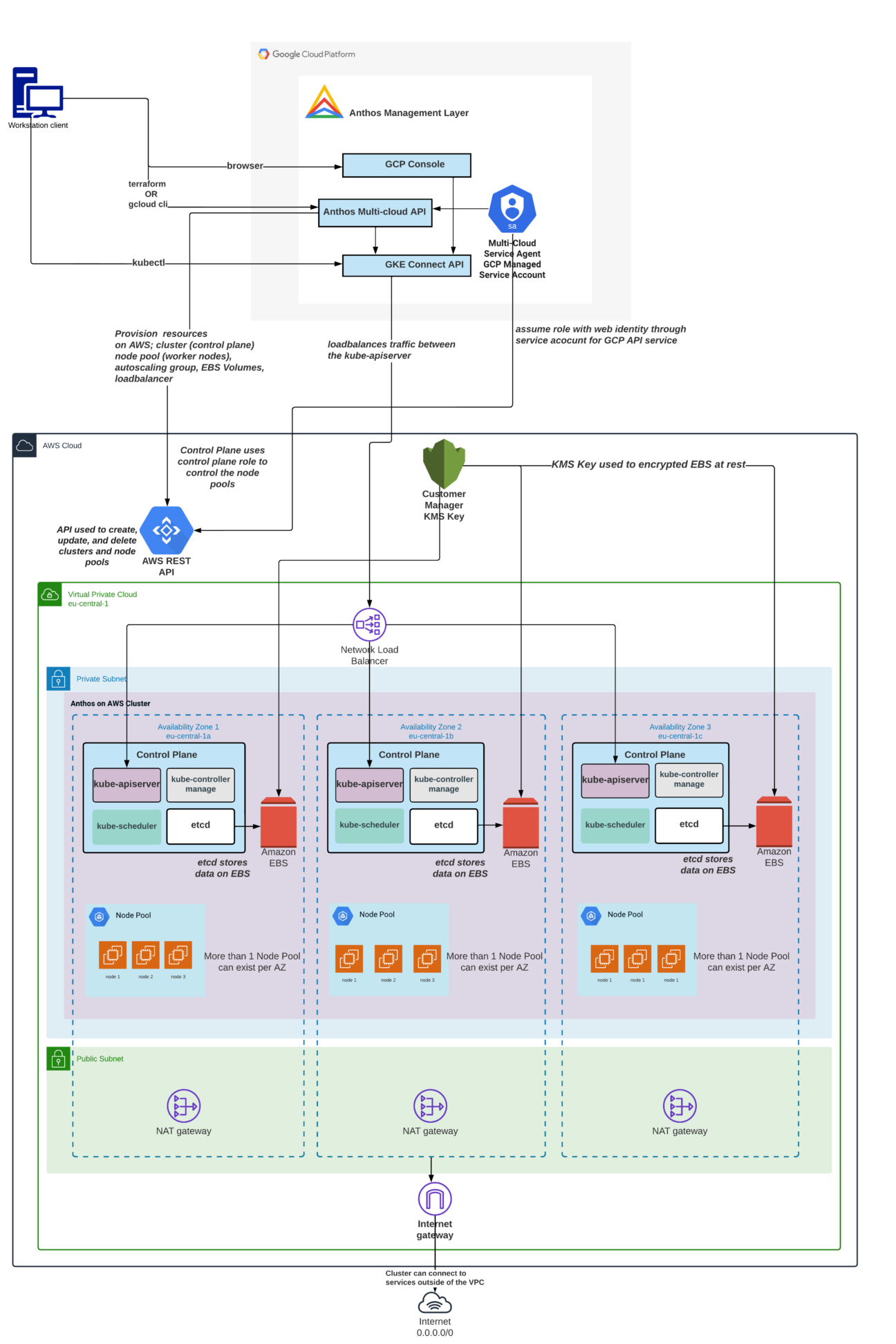

The high-level architecture diagram above shows the Anthos on AWS flavour of Anthos.

Let’s take a little technical dive into how Anthos on AWS can be set up and how it works in the background.

Anthos on AWS can be set up via 3 approaches:

1. Terraform

2. Google Cloud SDK (gcloud CLI)

3. Manually through the GCP console

If you use Terraform to provision Anthos on AWS, the Google Anthos Multi-Cloud API needs to communicate with the AWS REST API. Luckily, this communication is authenticated and set up via a GCP managed service account. This provides the relevant permissions between the two platforms for provisioning AWS-related resources and for managing the Anthos on AWS cluster, where the API is used to create, update and delete clusters and node pools.

Looking at the diagram, you can see a control plane replica sitting in each Availability Zone, within a subnet on an AWS VPC. This is intended as a high-availability mechanism that keeps the cluster reachable even when an AWS Availability Zone or a control plane node becomes unavailable.

If you’re already familiar with Kubernetes, you will know that the importance of the control plane (master node) cannot be stressed enough. This is because the control plane runs the core Kubernetes services, such as:

- kube-apiserver

- kube-controller-manager

- kube-scheduler

- etcd key-value store

Without these Kubernetes services running, the cluster is essentially out of action and unusable.

For a deep dive into Kubernetes, please refer to the Kubernetes Official Documentation.

Further down the Fig 5 diagram, you can see Node Pools under each Control Plane node.

A Node Pool is a group of Kubernetes worker nodes that all share the exact same configuration. Since the nodes in a Node Pool run on the same subnet, to achieve high availability you MUST provision multiple Node Pools across different subnets within the same AWS Region, similar to the architecture diagram.

Lastly, you can see a NAT gateway and an Internet Gateway as part of the public subnet. These provide the cluster with internet access and allow it to be reached from outside the AWS VPC, for example when running kubectl get nodes against the cluster to retrieve its nodes.
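The subnet rule above is easy to check mechanically. The snippet below is a small illustrative sketch, not part of any Anthos tooling: it takes a mapping of node pool name to subnet ID (all names here are hypothetical) and reports whether the pools are spread over at least two subnets.

```python
def subnets_used(node_pools: dict) -> set:
    """Return the distinct subnet IDs that the node pools are placed in."""
    return set(node_pools.values())


def spans_multiple_subnets(node_pools: dict, minimum: int = 2) -> bool:
    """True when the node pools cover at least `minimum` different subnets."""
    return len(subnets_used(node_pools)) >= minimum


# Hypothetical layout: one node pool per subnet, as in the diagram.
spread_out = {"pool-a": "subnet-az1", "pool-b": "subnet-az2", "pool-c": "subnet-az3"}
# Hypothetical layout: two node pools that share a single subnet.
single_subnet = {"pool-a": "subnet-az1", "pool-b": "subnet-az1"}

print(spans_multiple_subnets(spread_out))     # True
print(spans_multiple_subnets(single_subnet))  # False
```

The second layout has two Node Pools but still fails the check, because the loss of that one subnet would take every worker node with it.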

What about logging and monitoring?

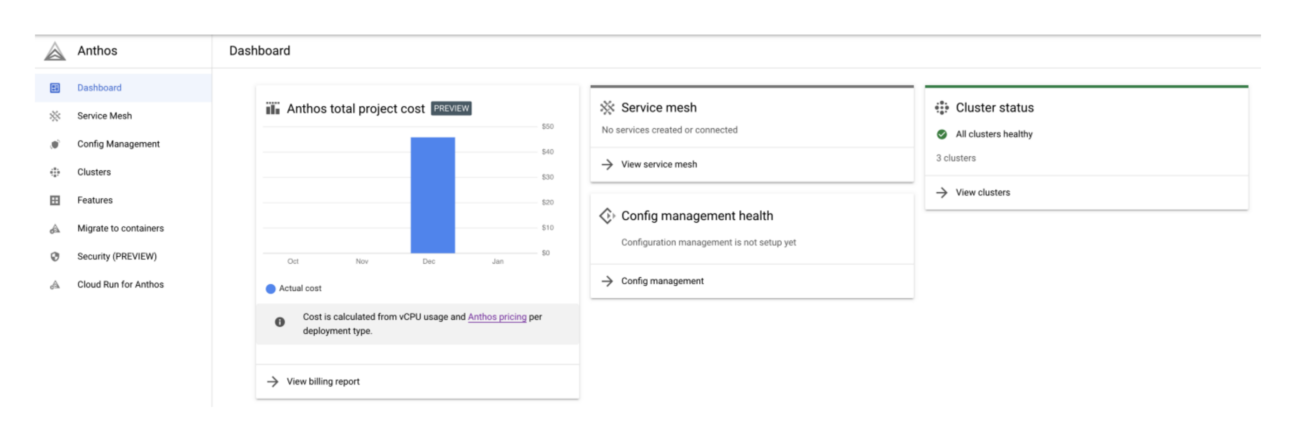

Once you have spun up a working Anthos on AWS cluster, you can generate a GCP bearer token and register the cluster via the GCP Anthos console. Through the dashboard, you will be able to view cluster-related information and the Anthos features used as part of that cluster, for example Anthos Config Management.

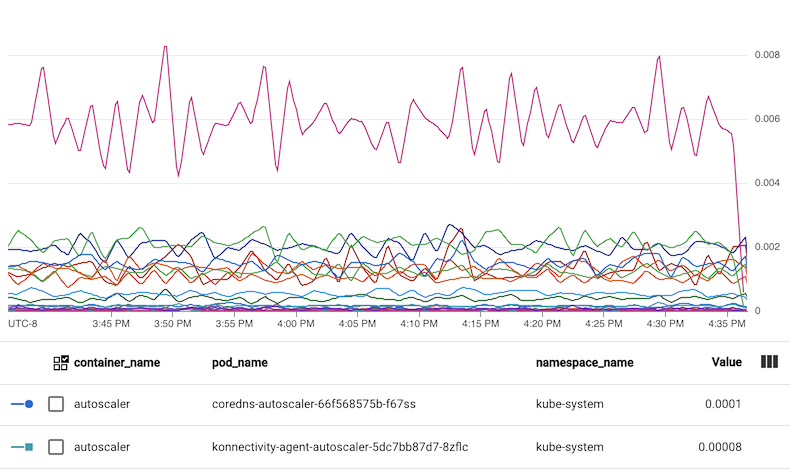

There is good news in regards to logging and monitoring: Anthos clusters on AWS are already integrated with Cloud Monitoring. This GCP native service scrapes metric data from nodes, pods and workload containers. The integration allows you to quickly see the resource consumption of workloads in the cluster and build dashboards with threshold alerts.

When the Anthos on AWS cluster is created, a metrics collector, gke-metrics-agent, is automatically deployed as part of the cluster. This agent is based on the OpenTelemetry Collector and runs on every node in the cluster as a DaemonSet. It samples metrics every minute and pushes the measurements to GCP Cloud Monitoring, where the user can view them on a dashboard.
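To illustrate what a threshold alert on these per-minute samples amounts to, here is a conceptual Python sketch. It does not use the Cloud Monitoring API; the function name, the 80% threshold and the sample values are all assumptions made for the example. The alert fires only when the metric stays above the threshold for several consecutive samples, which avoids alerting on a single spike.

```python
def breaches_threshold(samples: list, threshold: float, consecutive: int = 3) -> bool:
    """True if `consecutive` samples in a row are strictly above `threshold`."""
    run = 0
    for value in samples:
        run = run + 1 if value > threshold else 0  # reset the run on any sample at or below
        if run >= consecutive:
            return True
    return False


# Made-up CPU utilisation samples, one per minute (1.0 means 100%).
sustained = [0.50, 0.90, 0.95, 0.92, 0.40]
spiky = [0.90, 0.50, 0.90, 0.50]

print(breaches_threshold(sustained, threshold=0.80))  # True
print(breaches_threshold(spiky, threshold=0.80))      # False
```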

Last but not least, Anthos on AWS clusters also support Cloud Logging for all system components running on both control plane and worker nodes, which includes:

- Logs for system components on each of the control plane replicas.

- Logs for system services on each of the node pool nodes.

And the stand-out Anthos Feature?

Anthos Config Management is particularly useful for scenarios where companies are expanding their development and production clusters constantly.

ACM is used to create and enforce consistent configurations and security policies across the ever-growing environment. ACM achieves this by enabling platform operators to automatically deploy shared environment configurations and enforce approved security policies across Kubernetes clusters on-premises, natively on GCP, and in other cloud platforms such as AWS and Microsoft Azure.

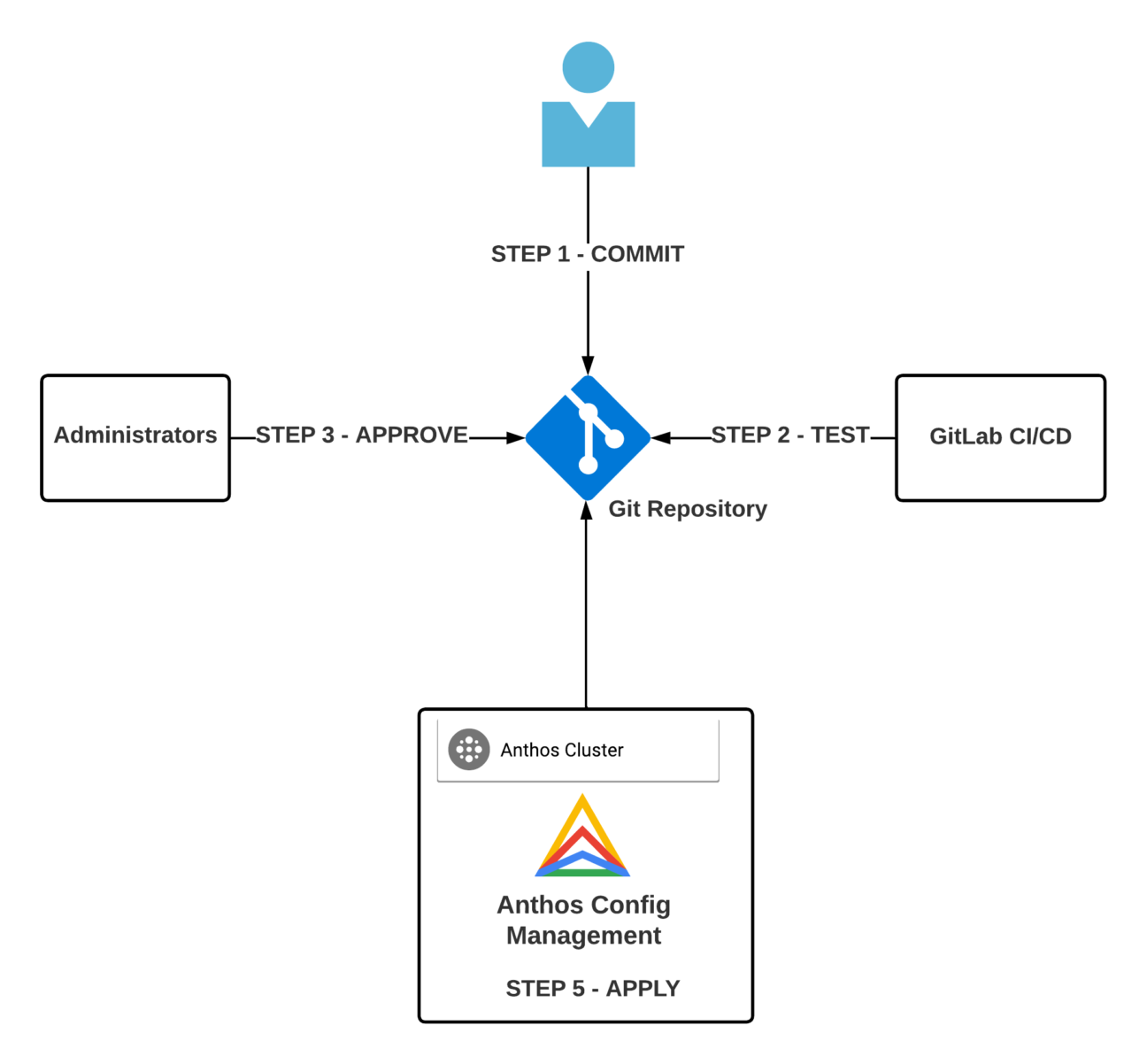

Using ACM allows us to follow a strict GitOps approach. For example, when a new commit containing a Kubernetes Service Account configuration file is pushed to a GitLab repo, that Service Account is automatically created on the cluster. From then on, any committed change to that Service Account file is applied to the cluster as well.
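The idea behind this GitOps loop can be sketched in a few lines of Python. This is a conceptual illustration only, not how ACM is implemented: the function name `plan_sync` and the Service Account names are hypothetical, and both the repo contents and the cluster state are reduced to plain dictionaries keyed by Service Account name.

```python
def plan_sync(desired: dict, actual: dict) -> dict:
    """Compare the repo (desired) with the cluster (actual) and list the changes needed."""
    return {
        # In the repo but not yet on the cluster.
        "create": sorted(name for name in desired if name not in actual),
        # On both sides, but the cluster copy differs from the repo copy.
        "update": sorted(name for name in desired
                         if name in actual and desired[name] != actual[name]),
        # On the cluster but no longer in the repo.
        "delete": sorted(name for name in actual if name not in desired),
    }


# Hypothetical Service Accounts declared in the Git repo.
repo = {"ci-deployer": {"namespace": "build"}, "app-reader": {"namespace": "prod"}}
# Hypothetical Service Accounts currently on the cluster.
cluster = {"app-reader": {"namespace": "staging"}, "old-account": {"namespace": "prod"}}

print(plan_sync(repo, cluster))
# {'create': ['ci-deployer'], 'update': ['app-reader'], 'delete': ['old-account']}
```

The point of the pattern is that the repo is the single source of truth: the cluster is always moved towards what is committed, never the other way round.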

The downside of Anthos

We have discussed the many great benefits of using Anthos. However, where there are pros there are also cons.

What we discovered after setting up and spending some time testing this Google service is a lack of customisability at the pre-cluster build stage. For example, you cannot select another logging or monitoring service, as everything comes by default “out of the box”.

Another downside, which I hope Google will improve on, is that the setup approach for certain Anthos features differs based on the cluster type, e.g. a user type Anthos cluster or a standalone type Anthos cluster.

If you’re running a user cluster, ACM installation, for example, is only possible through the GCP console, whereas for standalone clusters you have the option to install ACM manually through kubectl commands, which in my opinion gives the user much more control over the ACM setup.

The inconsistency described above shows that Anthos still has room to improve and mature, which is understandable given its short time on the market.

Is it worth going to Anthos?

Although Anthos is a fairly new technology, released only back in 2019, Google has made major strides in the world of hybrid cloud management. This was highlighted when Anthos’s support for AWS and Microsoft Azure was released in 2020 and 2021 respectively.

Google’s major cloud competitors, AWS and Microsoft, have also joined the party, although their products are at a less mature stage than Anthos.

Furthermore, Anthos contains Stackdriver for observability, GCP Cloud Interconnect for high-speed connectivity, Anthos Service Mesh (based on the open-source Istio project), Cloud Run serverless deployment service, Google Cloud and Anthos Config Management. Google is clearly trying to be “all-inclusive” for managing Kubernetes workloads regardless of where they reside.

These features are especially beneficial for organisations or businesses running workloads on multiple cloud platforms such as AWS and Azure. Anthos can manage and host these workloads via GCP in a far more centralised and manageable way, especially in a continuously growing environment.

Plus, the economic benefits simply cannot be ignored. Forrester’s consultation report, carried out on behalf of Google, highlighted a 38% reduction in non-coding activities, a 13-fold increase in pushing products and services to production and a 55% increase in platform efficiencies.

In conclusion – and quoting from one of Google’s customers presented in Forrester’s report:

“Anthos is going to drive better security. It’s going to drive better policy management, so the security teams can start to build policy and code instead of having to go do manual audits and then going and looking at different things in environments. Everything has been manual up until now; the way you can now do security with Anthos is very different.”

Interested in what Anthos can do for your business?

Contact us to speak to Jolyon or one of our team of experts.